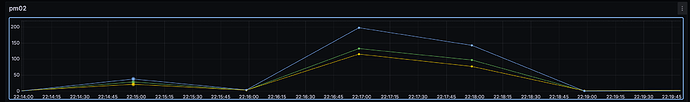

```
2025-02-09 13:08:01 pm02Compensated: 948.89
2025-02-09 13:08:04 pm02Compensated: 1136.21
2025-02-09 13:08:07 pm02Compensated: 1264.21
2025-02-09 13:08:10 pm02Compensated: 1334.69
2025-02-09 13:08:13 pm02Compensated: 1279.43
2025-02-09 13:08:16 pm02Compensated: 1087.97
2025-02-09 13:08:20 pm02Compensated: 980.06
2025-02-09 13:08:23 pm02Compensated: 771.77
2025-02-09 13:08:26 pm02Compensated: 681.78
2025-02-09 13:08:29 pm02Compensated: 526.86
2025-02-09 13:08:32 pm02Compensated: 459.74
2025-02-09 13:08:35 pm02Compensated: 340.83
2025-02-09 13:08:38 pm02Compensated: 293.2
2025-02-09 13:08:41 pm02Compensated: 200.2
2025-02-09 13:08:44 pm02Compensated: 162.82
2025-02-09 13:08:47 pm02Compensated: 123.25
2025-02-09 13:08:50 pm02Compensated: 107.01
2025-02-09 13:08:53 pm02Compensated: 81.47
2025-02-09 13:08:56 pm02Compensated: 62.87
2025-02-09 13:08:59 pm02Compensated: 55.92
```

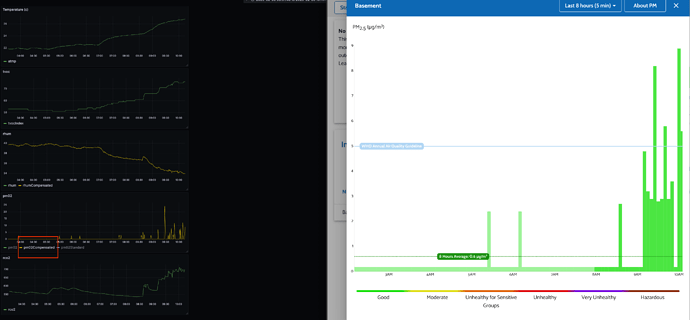

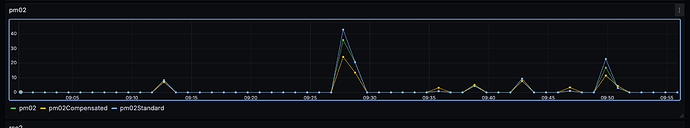

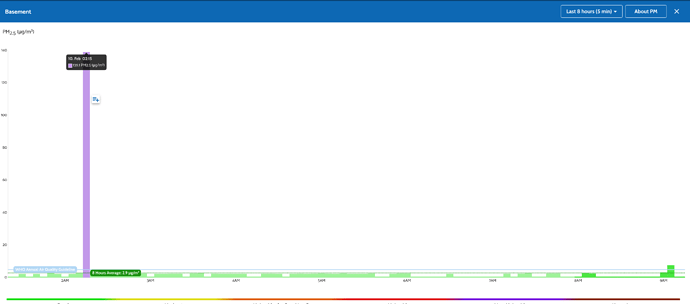

To make sure my point is clear: these are “micro bursts”. The fact that the sensor, in its current form, can detect them is a feature, not a bug. I would be sad if you guys chose to smooth out the data even more at the firmware level.

For my use case, however, I just don’t need to know that they are happening. That’s why I think it’s best to handle this externally, where my use case is defined.

Also, I just need to say how much I respect you guys. If I am understanding this correctly, we are talking about a device that shoots a laser beam at a wall and extrapolates the volumetric density of microscopic particles based on how much light is blocked. And this device costs less than 20 dollars. It’s a wonder it works at all.

EDIT

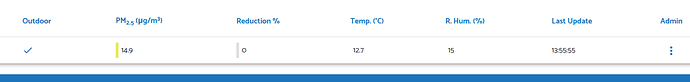

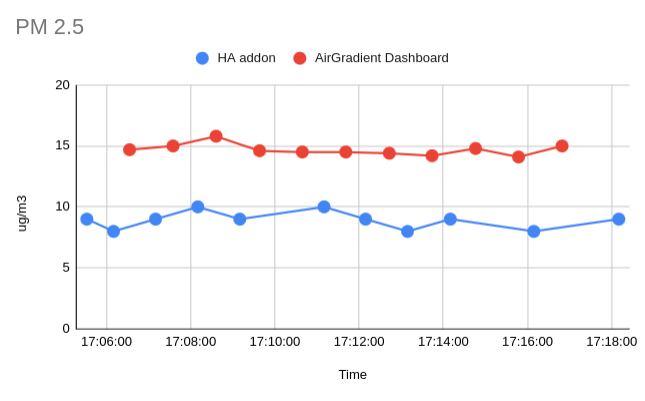

It seems the AirGradient Dashboard is applying even more smoothing than I am, probably because of the differing sample times and sample rates, but I’m not sure.

It gives me a value of 140, whereas I am getting a value of 250. That is not good news for Home Assistant users.
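Differing sample timing alone can move a windowed average a long way when the underlying signal is a decaying burst. The sketch below only illustrates that effect; it is not a description of how the AirGradient Dashboard actually aggregates data, which I don't know. It takes the 3-second `pm02Compensated` readings from the first log in this post and computes a plain mean over 12 of them twice: once starting at 13:08:01 and once starting 8 readings later. The helper name `window_mean` is hypothetical.

```python
# Illustration only: how the start time of an averaging window changes the
# result for the same bursty series. window_mean is a hypothetical helper,
# not part of any AirGradient software.

# pm02Compensated readings at ~3 s intervals, 13:08:01 to 13:08:59,
# copied from the first log in this post.
READINGS = [
    948.89, 1136.21, 1264.21, 1334.69, 1279.43, 1087.97, 980.06,
    771.77, 681.78, 526.86, 459.74, 340.83, 293.2, 200.2,
    162.82, 123.25, 107.01, 81.47, 62.87, 55.92,
]


def window_mean(values, start, size=12):
    """Plain mean of `size` consecutive readings beginning at index `start`."""
    window = values[start:start + size]
    if len(window) < size:
        raise ValueError("not enough readings for a full window")
    return round(sum(window) / len(window), 2)


early = window_mean(READINGS, start=0)  # window starting at 13:08:01
late = window_mean(READINGS, start=8)   # window starting at 13:08:26
print(early, late)
```

Both windows cover the same burst at the same sample rate, yet the first averages to roughly 901 and the second to roughly 258, purely because of where the window starts.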

I added some debugging for now to make sure I’m not wrong. The math all seems to check out.

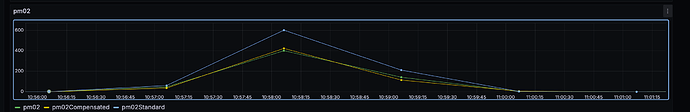

```
=== DEBUG: pm02Compensated Samples and Calculation ===
Collected Samples (Timestamp and Value):
2025-02-10T09:40:28.102332: 2.83
2025-02-10T09:40:31.135864: 4.93
2025-02-10T09:40:34.204736: 14.1
2025-02-10T09:40:37.284114: 28.86
2025-02-10T09:40:40.352671: 89.91
2025-02-10T09:40:43.454977: 128.16
2025-02-10T09:40:46.493462: 256.44
2025-02-10T09:40:49.566585: 319.75
2025-02-10T09:40:52.585304: 415.06
2025-02-10T09:40:55.718626: 444.41
2025-02-10T09:40:58.778988: 461.91
2025-02-10T09:41:01.851754: 472.83
Processing 12 numeric samples:
Sorted Values: [2.83, 4.93, 14.1, 28.86, 89.91, 128.16, 256.44, 319.75, 415.06, 444.41, 461.91, 472.83]
Trimming highest and lowest: [4.93, 14.1, 28.86, 89.91, 128.16, 256.44, 319.75, 415.06, 444.41, 461.91]
Average: 216.353 => Rounded: 216.35
Final pm02Compensated value stored: 216.35
Data logged at 2025-02-10T09:42:05.134554

=== DEBUG: pm02Compensated Samples and Calculation ===
Collected Samples (Timestamp and Value):
2025-02-10T09:41:28.102435: 106.01
2025-02-10T09:41:31.140995: 92.78
2025-02-10T09:41:34.211874: 70.12
2025-02-10T09:41:37.285647: 53.75
2025-02-10T09:41:40.353161: 47.85
2025-02-10T09:41:43.530414: 37.98
2025-02-10T09:41:46.611290: 33.14
2025-02-10T09:41:49.695130: 25.99
2025-02-10T09:41:52.750837: 22.77
2025-02-10T09:41:55.820521: 19.62
2025-02-10T09:41:58.904748: 15.32
2025-02-10T09:42:02.062187: 13.57
Processing 12 numeric samples:
Sorted Values: [13.57, 15.32, 19.62, 22.77, 25.99, 33.14, 37.98, 47.85, 53.75, 70.12, 92.78, 106.01]
Trimming highest and lowest: [15.32, 19.62, 22.77, 25.99, 33.14, 37.98, 47.85, 53.75, 70.12, 92.78]
Average: 41.931999999999995 => Rounded: 41.93
Final pm02Compensated value stored: 41.93
Data logged at 2025-02-10T09:43:04.936802

=== DEBUG: pm02Compensated Samples and Calculation ===
Collected Samples (Timestamp and Value):
2025-02-10T09:42:28.102523: 3.53
2025-02-10T09:42:31.151005: 3.1
2025-02-10T09:42:34.234413: 2.92
2025-02-10T09:42:37.287007: 0.0
2025-02-10T09:42:40.364770: 0.0
2025-02-10T09:42:43.446056: 0.0
2025-02-10T09:42:46.526924: 0.0
2025-02-10T09:42:49.577013: 0.0
2025-02-10T09:42:52.659340: 0.0
2025-02-10T09:42:55.720521: 0.0
2025-02-10T09:42:58.794124: 0.0
2025-02-10T09:43:01.868487: 0.0
Processing 12 numeric samples:
Sorted Values: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 2.92, 3.1, 3.53]
Trimming highest and lowest: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 2.92, 3.1]
Average: 0.602 => Rounded: 0.6
Final pm02Compensated value stored: 0.6
Data logged at 2025-02-10T09:44:05.053475

=== DEBUG: pm02Compensated Samples and Calculation ===
Collected Samples (Timestamp and Value):
2025-02-10T09:43:28.102607: 0.0
2025-02-10T09:43:31.156735: 0.0
2025-02-10T09:43:34.234893: 6.06
2025-02-10T09:43:37.319414: 14.44
2025-02-10T09:43:40.366756: 90.54
2025-02-10T09:43:43.440058: 157.62
2025-02-10T09:43:46.535286: 473.8
2025-02-10T09:43:49.585015: 673.89
2025-02-10T09:43:52.654873: 1096.99
2025-02-10T09:43:55.728116: 1247.96
2025-02-10T09:43:58.798272: 1328.62
2025-02-10T09:44:01.847002: 1277.57
Processing 12 numeric samples:
Sorted Values: [0.0, 0.0, 6.06, 14.44, 90.54, 157.62, 473.8, 673.89, 1096.99, 1247.96, 1277.57, 1328.62]
Trimming highest and lowest: [0.0, 6.06, 14.44, 90.54, 157.62, 473.8, 673.89, 1096.99, 1247.96, 1277.57]
Average: 503.887 => Rounded: 503.89
Final pm02Compensated value stored: 503.89
Data logged at 2025-02-10T09:45:05.063191

=== DEBUG: pm02Compensated Samples and Calculation ===
Collected Samples (Timestamp and Value):
2025-02-10T09:44:28.102687: 156.47
2025-02-10T09:44:31.159884: 135.9
2025-02-10T09:44:34.239025: 101.97
2025-02-10T09:44:37.302389: 88.08
2025-02-10T09:44:40.374135: 65.68
2025-02-10T09:44:43.460625: 57.56
2025-02-10T09:44:46.535438: 45.63
2025-02-10T09:44:49.590963: 35.09
2025-02-10T09:44:52.670750: 30.46
2025-02-10T09:44:55.749919: 21.75
2025-02-10T09:44:58.805345: 18.3
2025-02-10T09:45:01.980746: 16.55
Processing 12 numeric samples:
Sorted Values: [16.55, 18.3, 21.75, 30.46, 35.09, 45.63, 57.56, 65.68, 88.08, 101.97, 135.9, 156.47]
Trimming highest and lowest: [18.3, 21.75, 30.46, 35.09, 45.63, 57.56, 65.68, 88.08, 101.97, 135.9]
Average: 60.041999999999994 => Rounded: 60.04
Final pm02Compensated value stored: 60.04
Data logged at 2025-02-10T09:46:04.906214

=== DEBUG: pm02Compensated Samples and Calculation ===
Collected Samples (Timestamp and Value):
2025-02-10T09:45:28.102805: 31.06
2025-02-10T09:45:31.162322: 92.0
2025-02-10T09:45:34.257449: 126.97
2025-02-10T09:45:37.313573: 192.26
2025-02-10T09:45:40.378747: 222.16
2025-02-10T09:45:43.461488: 220.22
2025-02-10T09:45:46.528455: 197.24
2025-02-10T09:45:49.597348: 154.23
2025-02-10T09:45:52.670155: 137.33
2025-02-10T09:45:55.757070: 108.64
2025-02-10T09:45:58.826523: 95.28
2025-02-10T09:46:01.888249: 83.36
Processing 12 numeric samples:
Sorted Values: [31.06, 83.36, 92.0, 95.28, 108.64, 126.97, 137.33, 154.23, 192.26, 197.24, 220.22, 222.16]
Trimming highest and lowest: [83.36, 92.0, 95.28, 108.64, 126.97, 137.33, 154.23, 192.26, 197.24, 220.22]
Average: 140.753 => Rounded: 140.75
Final pm02Compensated value stored: 140.75
```
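The calculation printed in these debug logs amounts to a trimmed mean. Sort the 12 readings collected in the window, drop the single lowest and single highest value, average the remaining 10, and round to two decimals. The sketch below is reconstructed from the log output, not taken from the actual script, and the function name `trimmed_window_average` is hypothetical.

```python
# Minimal sketch of the per-window calculation shown in the debug output:
# drop one lowest and one highest reading, average the rest, round to 2 dp.
# trimmed_window_average is a hypothetical name reconstructed from the logs.


def trimmed_window_average(samples):
    """Return the mean of `samples` after removing one min and one max."""
    if len(samples) < 3:
        raise ValueError("need at least 3 samples to trim both ends")
    ordered = sorted(samples)
    trimmed = ordered[1:-1]  # only ONE lowest and ONE highest value are removed
    return round(sum(trimmed) / len(trimmed), 2)


# The 12 readings from the 09:45:28 to 09:46:01 window above.
window = [31.06, 92.0, 126.97, 192.26, 222.16, 220.22,
          197.24, 154.23, 137.33, 108.64, 95.28, 83.36]
print(trimmed_window_average(window))  # 140.75, matching the log
```

Only one value is dropped at each end. A window that is mostly zeros (as in the low-value logs) can therefore still average to a small non-zero number, and a burst that fills half the window (the 503.89 minute) still pulls the result up substantially.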

Here’s how it behaves with lower values too:

```
=== DEBUG: pm02Compensated Samples and Calculation ===
Collected Samples (Timestamp and Value):
2025-02-10T09:51:28.103418: 2.94
2025-02-10T09:51:31.205576: 2.86
2025-02-10T09:51:34.275515: 2.86
2025-02-10T09:51:37.357718: 2.86
2025-02-10T09:51:40.418564: 2.77
2025-02-10T09:51:43.490516: 0.0
2025-02-10T09:51:46.580327: 0.0
2025-02-10T09:51:49.636483: 0.0
2025-02-10T09:51:52.911827: 0.0
2025-02-10T09:51:55.984531: 0.0
2025-02-10T09:51:59.073359: 0.0
2025-02-10T09:52:02.229144: 0.0
Processing 12 numeric samples:
Sorted Values: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 2.77, 2.86, 2.86, 2.86, 2.94]
Trimming highest and lowest: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 2.77, 2.86, 2.86, 2.86]
Average: 1.135 => Rounded: 1.14
Final pm02Compensated value stored: 1.14
Data logged at 2025-02-10T09:53:05.003288

=== DEBUG: pm02Compensated Samples and Calculation ===
Collected Samples (Timestamp and Value):
2025-02-10T09:52:28.103525: 0.0
2025-02-10T09:52:31.209501: 0.0
2025-02-10T09:52:34.280547: 0.0
2025-02-10T09:52:37.359848: 0.0
2025-02-10T09:52:40.425278: 0.0
2025-02-10T09:52:43.513907: 0.0
2025-02-10T09:52:46.579651: 0.0
2025-02-10T09:52:49.640605: 0.0
2025-02-10T09:52:52.732814: 0.0
2025-02-10T09:52:55.789193: 0.0
2025-02-10T09:52:58.867323: 0.0
2025-02-10T09:53:01.943444: 0.0
Processing 12 numeric samples:
Sorted Values: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
Trimming highest and lowest: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
Average: 0.0 => Rounded: 0.0
Final pm02Compensated value stored: 0.0
Data logged at 2025-02-10T09:54:05.109653

=== DEBUG: pm02Compensated Samples and Calculation ===
Collected Samples (Timestamp and Value):
2025-02-10T09:53:28.103608: 0.0
2025-02-10T09:53:31.216438: 0.0
2025-02-10T09:53:34.296985: 0.0
2025-02-10T09:53:37.372369: 0.0
2025-02-10T09:53:40.539583: 0.0
2025-02-10T09:53:43.604735: 0.0
2025-02-10T09:53:46.698252: 0.0
2025-02-10T09:53:49.750345: 0.0
2025-02-10T09:53:52.832900: 0.0
2025-02-10T09:53:55.898171: 0.0
2025-02-10T09:53:58.965873: 0.0
2025-02-10T09:54:02.044585: 0.0
Processing 12 numeric samples:
Sorted Values: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
Trimming highest and lowest: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
Average: 0.0 => Rounded: 0.0
Final pm02Compensated value stored: 0.0
Data logged at 2025-02-10T09:55:05.014974

=== DEBUG: pm02Compensated Samples and Calculation ===
Collected Samples (Timestamp and Value):
2025-02-10T09:54:28.103690: 0.0
2025-02-10T09:54:31.220519: 2.81
2025-02-10T09:54:34.295498: 2.9
2025-02-10T09:54:37.366560: 2.9
2025-02-10T09:54:40.438247: 2.9
2025-02-10T09:54:43.511212: 2.9
2025-02-10T09:54:46.606957: 2.9
2025-02-10T09:54:49.655432: 2.9
2025-02-10T09:54:52.746052: 2.99
2025-02-10T09:54:55.799464: 3.07
2025-02-10T09:54:58.870024: 3.07
2025-02-10T09:55:01.960419: 3.07
Processing 12 numeric samples:
Sorted Values: [0.0, 2.81, 2.9, 2.9, 2.9, 2.9, 2.9, 2.9, 2.99, 3.07, 3.07, 3.07]
Trimming highest and lowest: [2.81, 2.9, 2.9, 2.9, 2.9, 2.9, 2.9, 2.99, 3.07, 3.07]
Average: 2.9339999999999997 => Rounded: 2.93
Final pm02Compensated value stored: 2.93
Data logged at 2025-02-10T09:56:05.225285

=== DEBUG: pm02Compensated Samples and Calculation ===
Collected Samples (Timestamp and Value):
2025-02-10T09:55:28.103783: 0.0
2025-02-10T09:55:31.229108: 0.0
2025-02-10T09:55:34.301676: 0.0
2025-02-10T09:55:37.386761: 0.0
2025-02-10T09:55:40.444171: 2.81
2025-02-10T09:55:43.516230: 2.99
2025-02-10T09:55:46.620974: 2.99
2025-02-10T09:55:49.660691: 3.33
2025-02-10T09:55:52.835369: 3.42
2025-02-10T09:55:56.018025: 3.51
2025-02-10T09:55:59.089630: 3.42
2025-02-10T09:56:02.154025: 3.25
Processing 12 numeric samples:
Sorted Values: [0.0, 0.0, 0.0, 0.0, 2.81, 2.99, 2.99, 3.25, 3.33, 3.42, 3.42, 3.51]
Trimming highest and lowest: [0.0, 0.0, 0.0, 2.81, 2.99, 2.99, 3.25, 3.33, 3.42, 3.42]
Average: 2.221 => Rounded: 2.22
Final pm02Compensated value stored: 2.22
```