Hello,

I’m writing here to start a discussion and keep a record of the accuracy of the humidity correction formula for outdoor AG units.

Now that temperatures drop below freezing at night, it is becoming increasingly obvious that the humidity correction formula isn’t quite spot-on, especially as real conditions approach 100% RH. Nor is it wrong in a consistent direction: above freezing, the corrected RH overestimates the real humidity through most of the range, while below 0 °C it starts underestimating it as real conditions approach 95+% RH. Since the humidity is in turn used to compensate the SGP41 readings, those get slightly skewed as well.
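For context on how an RH error reaches the SGP41: the sensor takes its humidity and temperature compensation values as raw 16-bit ticks. The sketch below follows the encoding given in Sensirion’s SGP41 datasheet (RH ticks = %RH × 65535 / 100, T ticks = (T + 45) × 65535 / 175). The helper names are hypothetical, and the clamping and rounding are my assumptions rather than what any particular firmware does. It uses the -1.4 °C example from the table below.

```javascript
// Hypothetical helpers: encode compensation inputs as SGP41 16-bit ticks,
// following the datasheet formulas. Clamping to 0-100 %RH and -45-130 °C
// and rounding to the nearest integer are assumptions of this sketch.
function rhToTicks(rhPercent) {
  const rh = Math.min(Math.max(rhPercent, 0), 100);
  return Math.round(rh * 65535 / 100);
}

function tempToTicks(tCelsius) {
  const t = Math.min(Math.max(tCelsius, -45), 130);
  return Math.round((t + 45) * 65535 / 175);
}

// SHT45 at 100 % vs corrected AG at 96.09 % (same temperature, -1.4 °C)
const gap = rhToTicks(100) - rhToTicks(96.09);
console.log(`RH input differs by ${gap} ticks`); // → RH input differs by 2562 ticks
```

So the 4-point RH gap becomes an offset of roughly 4% of the RH input’s full range; how much that moves the VOC/NOx index depends on the gas index algorithm, which this sketch does not model.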

A couple of indicators lead me to think that the correction itself is off, rather than there being real differences in humidity between the two sensors. First and foremost, I’m fairly close (within 10 km) to a reference weather station, and the two rarely agree (although the station does agree with my other sensors quite often). But the station is not co-located, so I can’t really derive any proof from that. What I do have is a co-located SHT45, whose readings I also use to calculate specific humidity. For instance, now that it is freezing outdoors and all other sources report RH = 100%, I’m seeing the AG under-report RH by almost 4 percentage points:

| Temperature (°C) | RH (%) | Dew point (°C) | AG raw RH (%) | AG RH (%) | AG dew point (°C) | SHT45 RH (%) | SHT45 dew point (°C) |
|---|---|---|---|---|---|---|---|
| -1.4 | 100 | -1.4 | 70.5 | 96.09 | -1.88 | 100 | -1.4 |

From here on I’m going to compare specific humidity in g/kg, because that’s the quantity I find easiest to reason about, and because it is largely independent of temperature: two sensors that report different RH% due to slight temperature differences should still arrive at about the same specific humidity. (That said, the AG’s corrected temperature looks extremely accurate to me.)
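A quick sketch of why specific humidity sidesteps small temperature offsets: for the same parcel of air, the water vapour partial pressure is fixed, so a sensor reading slightly warmer reports a lower RH%, while the derived specific humidity (at a given pressure) is unchanged. The 10 °C / 80% / +0.5 °C values and the helper names are illustrative assumptions; only the over-water Lowe & Ficke (1974) polynomial is used, since the example is above freezing.

```javascript
// Saturation vapour pressure over liquid water in hPa (Lowe & Ficke, 1974)
function psatWater(t) {
  return 6.107799961 + t * (4.436518521e-1 + t * (1.428945805e-2 + t * (2.650648471e-4 +
    t * (3.031240396e-6 + t * (2.034080948e-8 + t * 6.136820929e-11)))));
}

// Hypothetical helper: RH (%) a second sensor would report for the same air
// if it read tOther instead of tRef. The water vapour partial pressure is
// a property of the air, so it is held constant.
function rhAtOtherTemp(rhRef, tRef, tOther) {
  const pH2O = psatWater(tRef) * rhRef / 100; // hPa, same for both sensors
  return 100 * pH2O / psatWater(tOther);
}

// A sensor reading 0.5 °C warmer than the reference, in the same air
const rhWarm = rhAtOtherTemp(80, 10, 10.5);
console.log(`reference: 80.0 %, warmer sensor: ${rhWarm.toFixed(1)} %`);
```

Both readings imply the same partial pressure, so feeding either (T, RH) pair into the specific-humidity formula gives the same g/kg value, even though the RH% values differ by more than two points.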

To compute specific humidity from the temperature and RH% that the AG reports, I use the following formulae:

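```javascript
// Specific humidity from temperature and relative humidity.
// t:  measured (corrected) temperature in °C
// rh: relative humidity in %
// p:  atmospheric pressure in hPa (same unit as the saturation pressures below)
// Returns specific humidity in g/kg.
function specificHumidity(t, rh, p) {
  const MH2O = 18.01534; // molar mass of water in g/mol
  const Mdry = 28.9644; // molar mass of dry air in g/mol

  // H2O saturation vapour pressure in hPa, from Lowe & Ficke, 1974
  const pwat = 6.107799961 + t * (4.436518521e-1 + t * (1.428945805e-2 + t * (2.650648471e-4 + t * (3.031240396e-6 + t * (2.034080948e-8 + t * 6.136820929e-11))))); // over water
  const pice = 6.109177956 + t * (5.034698970e-1 + t * (1.886013408e-2 + t * (4.176223716e-4 + t * (5.824720280e-6 + t * (4.838803174e-8 + t * 1.838826904e-10))))); // over ice

  // The ice curve is lower below ~0 °C and the water curve above it,
  // so min() effectively switches between the two
  const psat = Math.min(pwat, pice);

  const p_h2o = psat * rh / 100; // partial pressure of water vapour in hPa
  const vmr = p_h2o / p; // volume mixing ratio of water vapour

  // mass fraction of water vapour in moist air (kg/kg), converted to g/kg
  const specific_humidity = vmr * MH2O / (vmr * MH2O + (1.0 - vmr) * Mdry);
  return specific_humidity * 1000;
}
```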
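To put the -1.4 °C table row into these units, here is the same formula as a self-contained function applied to the SHT45 (100%) and corrected AG (96.09%) readings. The local pressure isn’t recorded here, so 1013.25 hPa (standard sea-level pressure) is an assumption; at a fixed pressure, specific humidity scales almost linearly with RH, so the AG value should come out roughly 4% lower in g/kg as well.

```javascript
// Specific humidity in g/kg; t in °C, rh in %, p in hPa (Lowe & Ficke, 1974)
function specificHumidity(t, rh, p) {
  const MH2O = 18.01534, Mdry = 28.9644; // molar masses in g/mol
  const pwat = 6.107799961 + t * (4.436518521e-1 + t * (1.428945805e-2 + t * (2.650648471e-4 +
    t * (3.031240396e-6 + t * (2.034080948e-8 + t * 6.136820929e-11)))));
  const pice = 6.109177956 + t * (5.034698970e-1 + t * (1.886013408e-2 + t * (4.176223716e-4 +
    t * (5.824720280e-6 + t * (4.838803174e-8 + t * 1.838826904e-10)))));
  const vmr = Math.min(pwat, pice) * rh / 100 / p; // volume mixing ratio
  return 1000 * vmr * MH2O / (vmr * MH2O + (1 - vmr) * Mdry);
}

const P_ASSUMED = 1013.25; // hPa, assumed; substitute the local station pressure
const qSht = specificHumidity(-1.4, 100, P_ASSUMED);
const qAg = specificHumidity(-1.4, 96.09, P_ASSUMED);
console.log(`SHT45: ${qSht.toFixed(3)} g/kg, AG: ${qAg.toFixed(3)} g/kg, delta: ${(qAg - qSht).toFixed(3)} g/kg`);
```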

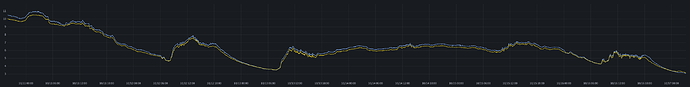

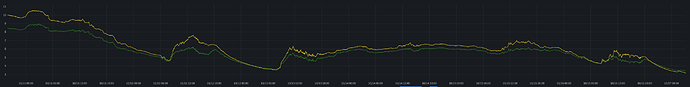

Without further ado, here’s a graph of the specific humidity computed from the AG (blue) and from a co-located SHT45 (yellow):

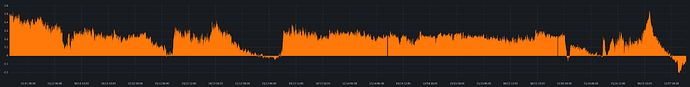

Or, as the delta between the two lines (AG minus SHT45): values above zero mean the AG reports higher specific humidity (and thus higher RH%) than my co-located “reference”, and values below zero mean it reports lower.

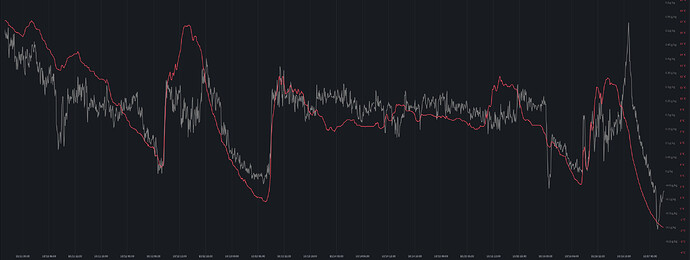
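A delta series like this needs the two sensors’ samples paired up first, since they rarely report at exactly the same instant. Below is a minimal sketch that pairs each AG sample with the nearest SHT45 sample in time and computes AG minus SHT45, so positive values mean the AG reports more water vapour. The sample shape (`time` in ms, `q` in g/kg, AG temperature `t` in °C), the function name and the 60 s tolerance are all assumptions.

```javascript
// Hypothetical helper: pair AG samples with the nearest SHT45 sample
// (within toleranceMs) and return {time, t, delta} with delta = AG - SHT45.
function humidityDeltas(agSamples, shtSamples, toleranceMs = 60000) {
  const ag = [...agSamples].sort((a, b) => a.time - b.time);
  const sht = [...shtSamples].sort((a, b) => a.time - b.time);
  const out = [];
  let j = 0;
  for (const s of ag) {
    // Both series are sorted, so the nearest SHT45 index never moves backwards
    while (j + 1 < sht.length &&
           Math.abs(sht[j + 1].time - s.time) <= Math.abs(sht[j].time - s.time)) {
      j++;
    }
    // Drop AG samples with no SHT45 reading close enough in time
    if (sht.length > 0 && Math.abs(sht[j].time - s.time) <= toleranceMs) {
      out.push({ time: s.time, t: s.t, delta: s.q - sht[j].q });
    }
  }
  return out;
}
```

Keeping the AG temperature alongside each delta makes it straightforward to check the delta against temperature afterwards.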

One observation: the specific humidity delta (gray) appears, to my eye, to correlate well with temperature (red) changes, and the two sensors start agreeing on humidity at around 3 °C. But there are also plenty of exceptions.
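The eyeballed correlation could be put into numbers with an ordinary least-squares fit of delta against temperature: the Pearson correlation r says how strong the relationship is, and the temperature where the fitted line crosses zero estimates where the two sensors agree. This is a sketch under a loudly stated assumption that the relationship is roughly linear; given that the error changes sign near 95+% RH, it may well depend on RH too. The function name is hypothetical; `temps` and `deltas` are parallel arrays of AG temperature (°C) and AG minus SHT45 delta (g/kg).

```javascript
// Hypothetical helper: fit delta ≈ intercept + slope * t by least squares,
// and report Pearson r and the temperature where the fitted delta is zero.
function deltaVsTemperature(temps, deltas) {
  const n = temps.length;
  if (n < 2 || n !== deltas.length) throw new Error("need two equal-length series, n >= 2");
  const meanT = temps.reduce((s, x) => s + x, 0) / n;
  const meanD = deltas.reduce((s, x) => s + x, 0) / n;
  let sTT = 0, sDD = 0, sTD = 0;
  for (let i = 0; i < n; i++) {
    const dt = temps[i] - meanT, dd = deltas[i] - meanD;
    sTT += dt * dt;
    sDD += dd * dd;
    sTD += dt * dd;
  }
  if (sTT === 0 || sDD === 0) throw new Error("temperature or delta has no variation");
  const slope = sTD / sTT; // g/kg per °C
  const intercept = meanD - slope * meanT; // g/kg at 0 °C
  const r = sTD / Math.sqrt(sTT * sDD); // Pearson correlation, -1..1
  const zeroCrossing = slope !== 0 ? -intercept / slope : NaN; // °C
  return { slope, intercept, r, zeroCrossing };
}
```

A strongly negative r with a zero crossing near 3 °C would back up the visual impression; a weak r would suggest the exceptions matter more than the temperature trend.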

Frankly, I don’t know what conclusions to draw at this point, and I have no suggestions for how the humidity correction might be improved. But it seems fairly clear that it may need to take the temperature into account somehow.

(Background: O-1PST built from a kit, running ESPHome-based firmware with the published correction formulae.)