Okay. A few things to share. I was tired of being “blind”, and I don’t use company resources for personal projects, so I finally picked up a scope for home use. I’m on a limited budget, but I was able to get a Rigol MSO5000-series scope with the full bandwidth unlocked. It’s spec’d at 350MHz, but according to others with the same scope it’s likely a bit higher. I’ve got a 6GHz RF generator coming so I can test the actual bandwidth of the front-end, but that shouldn’t matter here since it’s more than good enough for what we’re doing. For the probe, I’m using a Tektronix P6139A (500MHz, high-Z, 10X, 8.0pF passive), connected and properly compensated.

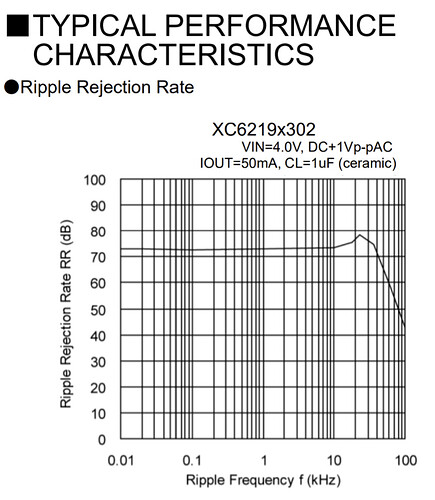

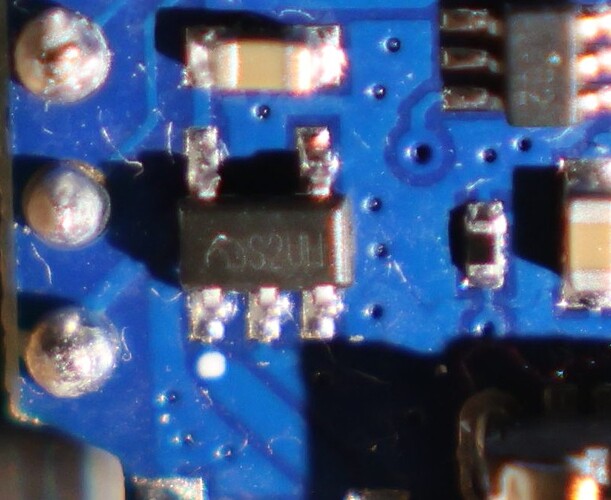

Last week, the LDO on my D1 Mini died (probably from my probing), and I had to wait about a week for replacement clones from Amazon. I suspect that LDO may have been at the limit of its capability. Based on the marking and the clues on the https://github.com/bbqkees/Wemos-the-Clone-Wars/blob/master/README.md page, I think it may be a 150mA LDO. The replacement clones seem to come with genuine MicrOne LDOs, but it’s not clear which part. The package marking shows the MicrOne logo and “S2U???”. Anyhow, I hope it’s rated for more than 150mA.

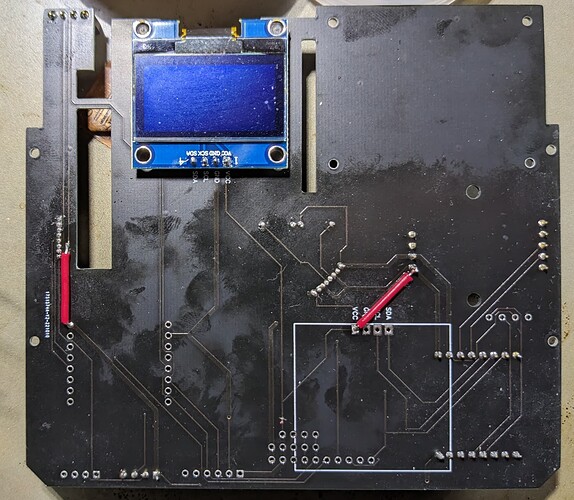

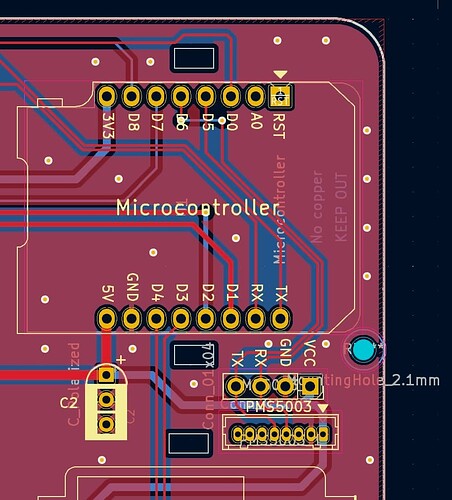

First of all, I’m not sure why I always thought the OLED was on 5V, but apparently I was wrong. I don’t see why it shouldn’t be, though, so I did some rework on my v3.3 PCB:

For the SHT header, I cut the 5V trace and connected it to 3.3V. For the OLED, I cut the 3.3V trace and connected it to 5V. Now the only things on the 3.3V rail are the D1 Mini itself, the SHT, and the SGP. The OLED, PMS, and S8 are all on 5V.

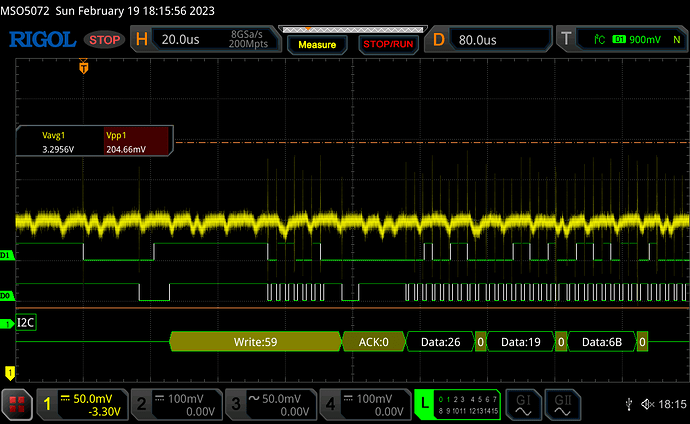

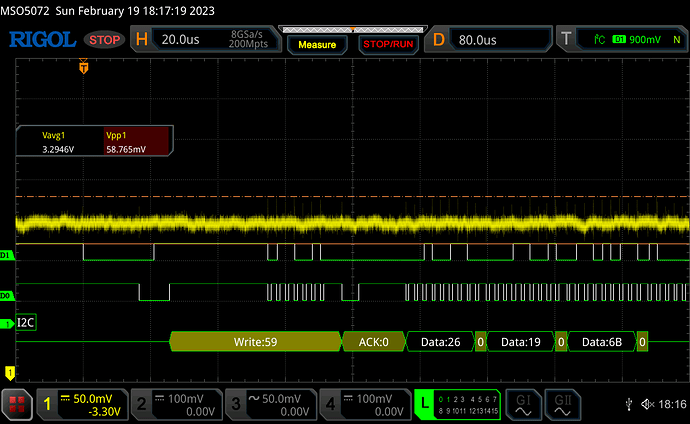

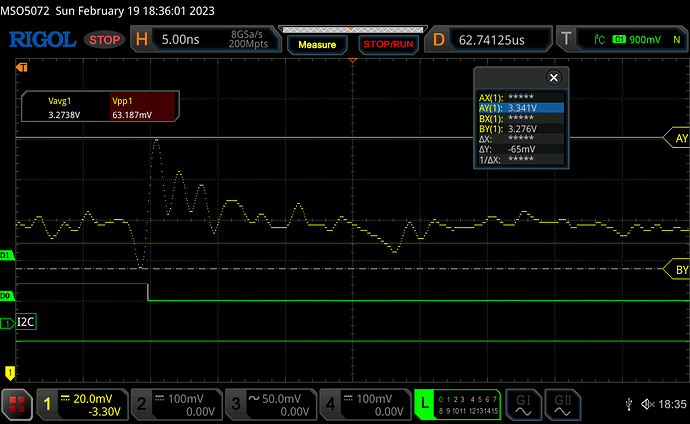

First, I measured the 3.3V rail at the SGP module’s header pins and confirmed spikes of about 200mVpp aligned with the I2C falling edges:

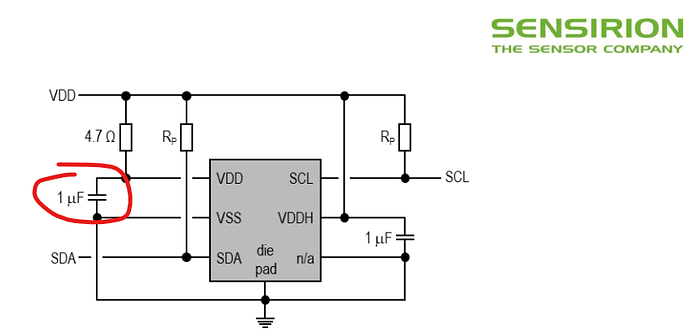

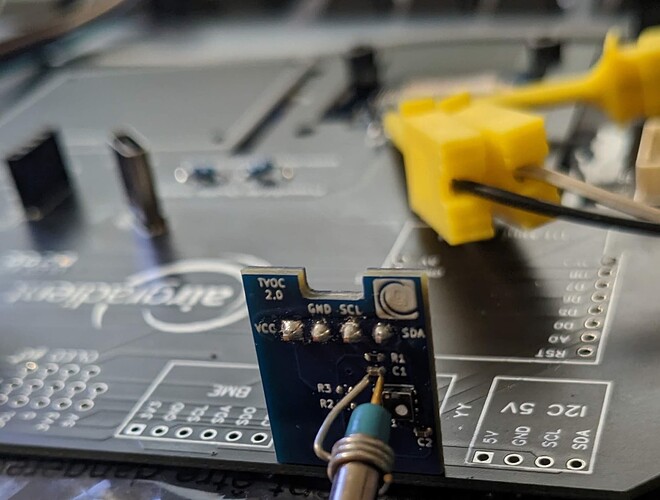

But proper supply measurements should be made at the decoupling capacitor, with a 20MHz bandwidth limit. The SGP module follows the recommended application circuit from the datasheet, so I took measurements at the SGP41’s VDD decoupling capacitor (C1):

First, here’s the measurement without the BW limit:

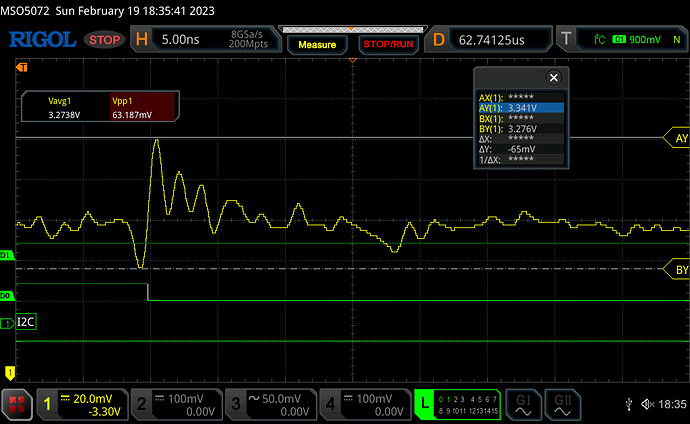

That’s only about 60mVpp at the decoupling capacitor. I picked one of the bigger spikes and zoomed in:

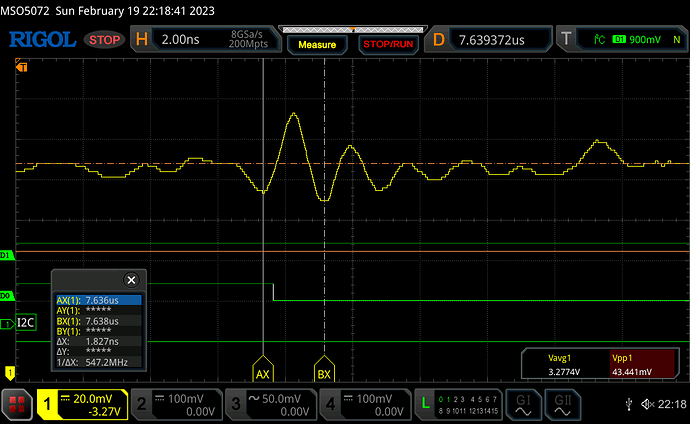

20mV/div is the most sensitive setting my scope allows at full bandwidth (below that it switches into BW limit mode), and you can see the 8-bit quantization of the waveform. It looks to be roughly a +40/-24mV deviation, which is only +1.2/-0.7% of 3.3V. But look at the rise time of that spike: it’s very fast, which means very high-frequency content. The actual amplitude could be slightly greater, since we may be nearing the bandwidth limits of my probe and scope. I was careful to keep the full sample rate of 8GSa/s (0.125ns sample period) to make sure we’re not aliasing here. To be sure, I turned on the dots display mode so you can see every sample:

Looking at more of these spikes, I’d say the content is roughly in the 500MHz ballpark:

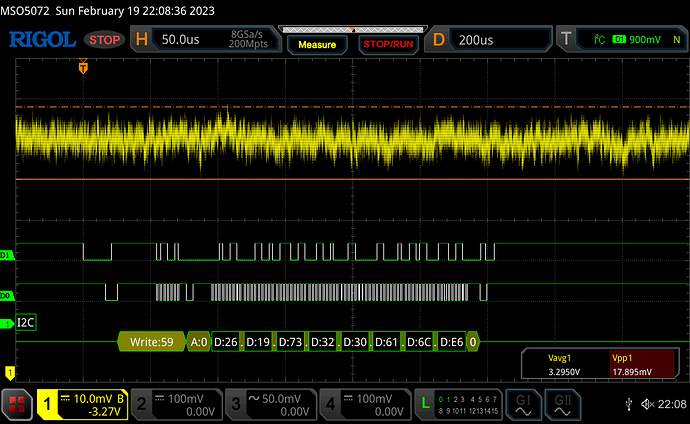
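A common rule of thumb for relating rise time to bandwidth, assuming a roughly single-pole response, is BW ≈ 0.35 / t_r (with t_r measured 10% to 90%). Cascaded stages such as the probe and the scope front-end combine approximately as the root-sum-of-squares of their rise times. The sketch below treats the 350MHz and 500MHz spec numbers as independent stages, which is only an approximation (the scope may exceed its spec, and the probe rating may already assume a particular scope); the function names are illustrative.

```cpp
#include <cassert>
#include <cmath>

// Rule of thumb for a single-pole (RC-like) response:
// BW (Hz) ~= 0.35 / t_r, where t_r is the 10%-90% rise time in seconds.
double bandwidthFromRiseTime(double riseTimeSeconds) {
    assert(riseTimeSeconds > 0.0);
    return 0.35 / riseTimeSeconds;
}

// Inverse of the same rule of thumb.
double riseTimeFromBandwidth(double bandwidthHz) {
    assert(bandwidthHz > 0.0);
    return 0.35 / bandwidthHz;
}

// Rise times of cascaded stages (e.g. probe and scope front-end)
// combine approximately as a root-sum-of-squares.
double combinedRiseTime(double tr1, double tr2) {
    return std::sqrt(tr1 * tr1 + tr2 * tr2);
}
```

With 350MHz for the scope and 500MHz for the probe, this gives rise times of about 1.0ns and 0.7ns, a combined rise time of about 1.22ns, and an effective system bandwidth of roughly 287MHz. If the spikes really do have content around 500MHz, that estimate is consistent with the displayed amplitude being somewhat understated.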

So, I took measurements with a 20MHz bandwidth limit on the scope channel:

The ~60mVpp dropped to <18mVpp:

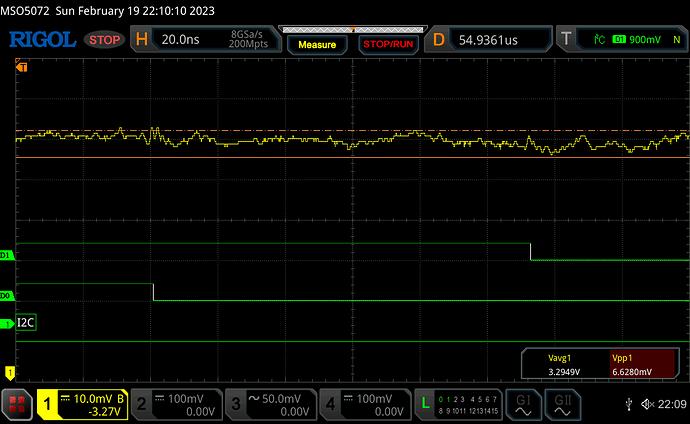
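As a rough illustration of why the bandwidth limit removes most of the spike, the sketch below models the 20MHz limit as a single-pole low-pass filter. The actual order of the scope’s bandwidth-limit filter is an assumption here, and a spike is not a single sine wave, so treat the result as an order-of-magnitude check; the function names are illustrative.

```cpp
#include <cassert>
#include <cmath>

// Magnitude response |H(f)| of a first-order low-pass filter with corner fc:
// |H| = 1 / sqrt(1 + (f / fc)^2)
double singlePoleGain(double freqHz, double cornerHz) {
    assert(cornerHz > 0.0);
    const double ratio = freqHz / cornerHz;
    return 1.0 / std::sqrt(1.0 + ratio * ratio);
}

// Convert a voltage gain to decibels.
double toDecibels(double gain) {
    assert(gain > 0.0);
    return 20.0 * std::log10(gain);
}
```

For a 500MHz component with a 20MHz corner, the gain is about 0.04 (roughly -28dB), so that content is attenuated by around 25x, while content at 20MHz is only reduced to about 0.707 (-3dB).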

Zooming in, I don’t see any effects aligned with the falling edges:

Most of what remains is just the noise floor of my lower-end scope’s front-end. Either way, it’s looking pretty decent.

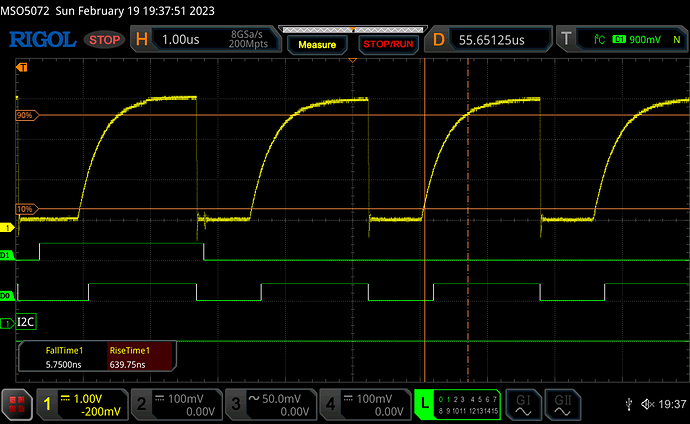

One concern was the rise times. My current configuration is unmodified, so the SHT and the OLED each still have 10K pull-ups. This gives me rise times of ~650ns.
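The I2C specification defines rise time from 30% to 70% of VDD, so for a simple RC-loaded bus it works out to t_r = R_p × C_b × ln(0.7/0.3) ≈ 0.8473 × R_p × C_b. The sketch below uses that relation to back out the bus capacitance and the largest pull-up that would meet a given limit. It assumes the two 10K pull-ups are the only ones on the bus (other modules may add their own) and that the ~650ns figure is a 30% to 70% measurement; a scope’s 10% to 90% measurement would need ln(0.9/0.1) instead. The function names are illustrative.

```cpp
#include <cassert>
#include <cmath>

// Parallel combination of two pull-up resistors (ohms).
double parallel(double r1, double r2) {
    assert(r1 > 0.0 && r2 > 0.0);
    return (r1 * r2) / (r1 + r2);
}

// For V(t) = VDD * (1 - exp(-t / RC)), the time to go from 30% to 70% of
// VDD is RC * ln(0.7 / 0.3), i.e. about 0.8473 * R * C.
double i2cRiseTime(double pullupOhms, double busCapFarads) {
    return pullupOhms * busCapFarads * std::log(0.7 / 0.3);
}

// Same relation solved for bus capacitance, given a measured rise time.
double busCapacitanceFromRiseTime(double riseSeconds, double pullupOhms) {
    assert(pullupOhms > 0.0);
    return riseSeconds / (pullupOhms * std::log(0.7 / 0.3));
}

// Largest pull-up that still meets a rise-time limit for a given capacitance.
double maxPullup(double riseLimitSeconds, double busCapFarads) {
    assert(busCapFarads > 0.0);
    return riseLimitSeconds / (busCapFarads * std::log(0.7 / 0.3));
}
```

With two 10K pull-ups in parallel (5K), a 650ns rise time implies roughly 153pF of bus capacitance, and meeting fast mode’s 300ns limit would need a pull-up of about 2.3K or less. Standard mode allows rise times up to 1000ns, so ~650ns is within spec at 100kHz.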

With ~650ns rise times, the bus doesn’t meet the I2C fast-mode requirement of 300ns. But I don’t think there’s any reason to run in fast mode: reading a small number of sensors every few seconds and updating a low-res, 1-bit screen doesn’t need anywhere near the data bandwidth of even standard-mode I2C. It turns out u8g2 runs the bus at a default of 400kHz (fast mode). Back in 2018, someone asked (https://github.com/olikraus/u8g2/issues/705) why the bus speed was hard-coded, and the u8g2 author added a new function to set the bus clock rate: setBusClock(). So, I made a quick change to the Arduino sketch:

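```cpp
void setup()
{
  Serial.begin(115200);

  // Lower the I2C clock from u8g2's 400kHz default to standard mode.
  // setBusClock() has to be called before begin().
  u8g2.setBusClock(100000);
  u8g2.begin();

  // ... (rest of setup() unchanged)
}
```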

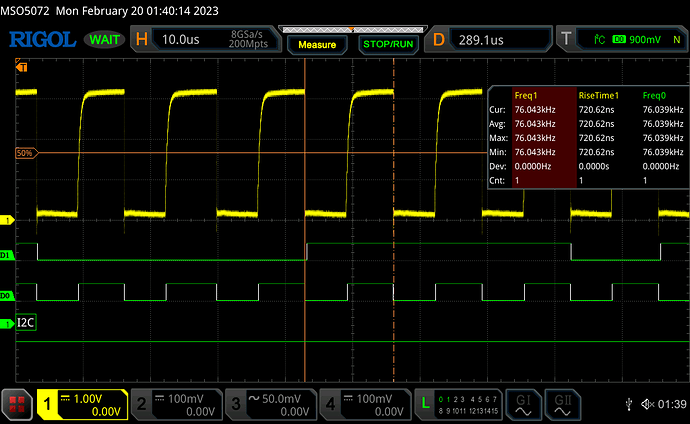

Strangely, this resulted in a clock rate of ~76kHz:

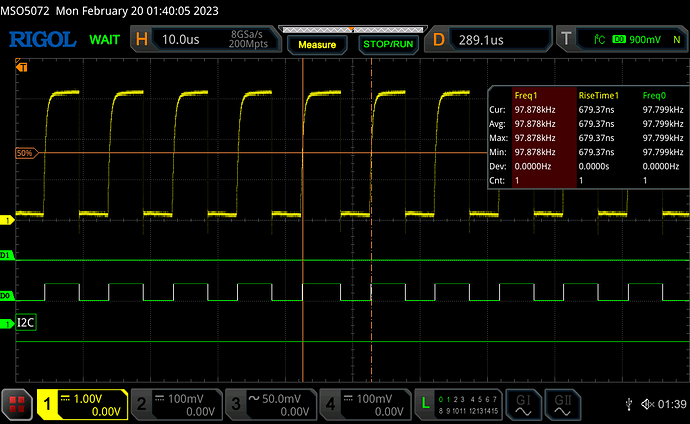

Setting it to 133kHz gets very close to a 100kHz clock rate (about 104kHz).

I then went ahead and tested a bunch of settings:

| Set (kHz) | Actual (kHz) | Actual / Set |
|---|---|---|
| 75 | 55.5 | 0.74 |
| 100 | 76 | 0.76 |
| 133 | 104 | 0.78 |
| 200 | 154.75 | 0.77 |
| 250 | 197.5 | 0.79 |
| 300 | 282 | 0.94 |
| 400 | 392 | 0.98 |
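Since the ratio between requested and actual clock isn’t constant, one way to pick a setBusClock() value for a target frequency is to interpolate the measured table. The sketch below is hypothetical (ClockSample and estimateSetting are illustrative names, not part of u8g2) and only describes this particular setup; the numbers may not carry over to other boards or core versions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One row of the measurement table: the value passed to setBusClock() and
// the SCL frequency measured on the scope, both in kHz.
struct ClockSample {
    double setKHz;
    double actualKHz;
};

// Estimate which setBusClock() value would give a target SCL frequency by
// linearly interpolating between the two neighbouring measurements.
// Assumes samples are sorted by ascending actualKHz and the target is in range.
double estimateSetting(const std::vector<ClockSample>& samples, double targetKHz) {
    assert(samples.size() >= 2);
    for (std::size_t i = 1; i < samples.size(); ++i) {
        const ClockSample& lo = samples[i - 1];
        const ClockSample& hi = samples[i];
        if (targetKHz <= hi.actualKHz) {
            assert(targetKHz >= lo.actualKHz);  // below the measured range
            const double t = (targetKHz - lo.actualKHz) / (hi.actualKHz - lo.actualKHz);
            return lo.setKHz + t * (hi.setKHz - lo.setKHz);
        }
    }
    assert(false && "target above the measured range");
    return samples.back().setKHz;
}
```

Using the table above, a 100kHz target falls between the 100kHz and 133kHz rows and interpolates to a setting of about 128kHz, slightly below the 133kHz setting that measured ~104kHz.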

I know I2C clock speeds aren’t exact, but it still seems strange to be so far off at the lower settings. The relationship isn’t linear, and there’s a major bend around 300kHz. I opened an issue on GitHub to ask about the reasoning behind this. For now, I have it set to 100kHz and am letting it run slower (~76kHz). The screen and all sensors work just fine. This thing is rock solid so far.